R

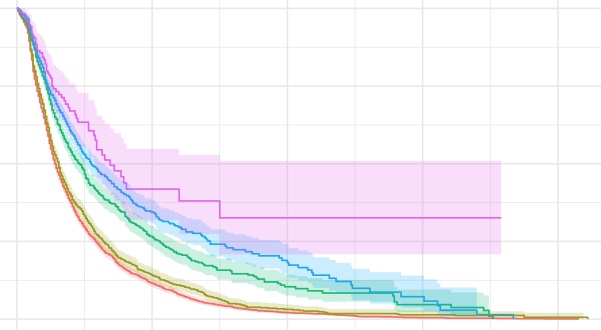

Survival Analysis 4: Cox proportional hazards model

This post briefly covers the derivation, estimation, assumptions, and application of the Cox proportional hazards (PH) model. It also touches on using ANOVA to compare two nested models.

Survival Analysis 3: Non-Parametric Comparison of Survival Functions

This post covers two common non-parametric tests for comparing survival functions, the Log-Rank Test and the Generalized Wilcoxon Test, and walks through the calculations for each in detail.

Survival Analysis 2: Non-Parametric Estimation of Survival Functions

Concepts of survival function estimation, with the corresponding calculations done both by hand and in R.

Causal Inference 4: Instrumental variable

The instrumental variable (IV) approach is an alternative causal inference method that does not rely on the ignorability assumption.

Causal Inference 3: Inverse probability of treatment weighting, IPTW

In this post we continue discussing the estimation of causal effects. We will cover the intuition behind IPTW and some key definitions, such as weighting and marginal structural models, and end with a data example in R.

Causal Inference 2: Propensity Score and Matching

In Part 1 we talked about the basic concepts of causal effects and confounding. In this post we will proceed to discuss how to control for confounders with matching.

Causal Inference 1: Causal Effects and Confounding

Causal inference is an active field in statistics, with important applications to observational data. In this post I will share some key concepts of causal inference:

- The confusion over causal inference

- The important causal assumptions

- The concept of causal effects

- Confounding and Directed Acyclic Graphs

Prediction of Children Anaemia Rate by LASSO

This post investigates five factors related to anaemia in children, using data collected from the World Health Organization. The method we will use is LASSO, a classic penalized regression method. In this post we will see how LASSO filters out variables for us and how its prediction performance compares with our baseline model, linear regression.

To implement LASSO in R, I used the "glmnet" package.

Regression Tree, Random Forest and XGBoost Algorithm

Tree-based methods are conceptually easy to understand and offer advantages such as easy visualization and data preprocessing. They are powerful tools for both numeric and categorical prediction. In this post I will show how to predict baseball player salaries with a Decision Tree and a Random Forest, from coding the algorithm to using packages.

The EM Algorithm from Scratch

The expectation-maximization (EM) algorithm is a powerful unsupervised machine learning tool. Conceptually, it is quite similar to the k-means algorithm, which I shared in this post. However, instead of clustering through estimated means, it clusters by estimating the parameters of the distributions and then evaluating how likely each observation is to belong to each distribution. Another difference is that EM uses soft assignment while k-means uses hard assignment.

Data Visualization with ggplot2

In this post I will share some frequently used ggplot2 commands for making data visualizations.

K-means Clustering Algorithm from Scratch

Modelling Daily Dow Jones Industrial Average by GARCH

GARCH is a well-known model for capturing volatility in data. It can be useful for financial and other time series data. This post explains the model structure, intuition, application, and evaluation.

Evaluate Wine by LSTM and Simple NN

This project focuses on one question: is it possible to let a machine evaluate a wine like a sommelier?

The answer is yes! With the help of a simple neural network and long short-term memory (LSTM), we can make it possible.